
An effector is any device that affects the environment. Robots control their effectors, which are also known as end effectors. Effectors include legs, wheels, arms, fingers, wings, and fins. Controllers cause the effectors to produce desired effects on the environment. An actuator is the actual mechanism that enables the effector to execute an action. Actuators typically include electric motors, hydraulic or pneumatic cylinders, etc. The terms effector and actuator are often used interchangeably to mean "whatever makes the robot take an action." This is not really proper use: actuators and effectors are not the same thing, and we'll try to be more precise in this class.

Most simple actuators control a single degree of freedom, i.e., a single motion (e.g., up-down, left-right, in-out). A motor shaft, for example, controls one rotational degree of freedom, and a sliding part on a plotter controls one translational degree of freedom. How many degrees of freedom (DOF) a robot has is very important in determining how it can affect its world, and therefore how well, if at all, it can accomplish its task. Just as we have said many times that sensors must be matched to the robot's task, effectors must be well matched to the robot's task too.

In general, a free body in space has 6 DOF: three for translation (x, y, z) and three for orientation/rotation (roll, pitch, and yaw). We'll come back to DOF in a bit. You need to know, for a given effector (and its actuators), how many DOF are available to the robot, as well as how many total DOF any given robot has. If there is an actuator for every DOF, then all of the DOF are controllable. Usually not all DOF are controllable, which makes robot control harder.

A car has 3 DOF: position (x, y) and orientation (theta). But only 2 DOF are controllable: driving (through the gas pedal and the forward-reverse gear) and steering (through the steering wheel). Since there are more DOF than controllable ones, there are motions that cannot be done, like moving sideways (that's why parallel parking is hard). We need to distinguish between what an actuator does (e.g., pushing the gas pedal) and what the robot does as a result (moving forward). A car can get to any 2D position, but it may have to follow a very complicated trajectory. Parallel parking requires a trajectory that is discontinuous with respect to velocity, i.e., the car has to stop and go.

When the number of controllable DOF is equal to the total number of DOF on a robot, it is holonomic. If the number of controllable DOF is smaller than the total DOF, the robot is non-holonomic. If the number of controllable DOF is larger than the total DOF, the robot is redundant. A human arm has 7 DOF (3 in the shoulder, 1 in the elbow, 3 in the wrist), all of which can be controlled. A free object in 3D space (e.g., the hand, the fingertip) can have at most 6 DOF! So there are redundant ways of putting the hand at a particular position in 3D space. This is at the core of why manipulation is very hard!
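The counting rule above can be written down directly. The sketch below is a minimal illustration that assumes the simplified, count-based definitions used in these notes; the names `classify`, `car_step`, and `lateral_velocity` are hypothetical. To show why the car cannot move sideways, it also models the car as a kinematic bicycle model with an assumed wheelbase. That model is not taken from the text. In it, the two controls (speed and steering angle) drive three state variables (x, y, theta), yet the velocity component perpendicular to the heading is always zero.

```python
import math
from enum import Enum


class DofClass(Enum):
    HOLONOMIC = "holonomic"
    NON_HOLONOMIC = "non-holonomic"
    REDUNDANT = "redundant"


def classify(controllable_dof: int, total_dof: int) -> DofClass:
    """Classify a robot with the counting rule used in these notes."""
    if controllable_dof == total_dof:
        return DofClass.HOLONOMIC
    if controllable_dof < total_dof:
        return DofClass.NON_HOLONOMIC
    return DofClass.REDUNDANT


def car_step(x, y, theta, speed, steer, wheelbase=2.5, dt=0.1):
    """One Euler step of a kinematic bicycle model of a car (assumed model).

    Two controls (speed, steering angle) drive three state variables
    (x, y, theta); no input pushes the car sideways.
    """
    nx = x + speed * math.cos(theta) * dt
    ny = y + speed * math.sin(theta) * dt
    ntheta = theta + speed / wheelbase * math.tan(steer) * dt
    return nx, ny, ntheta


def lateral_velocity(theta, dx, dy, dt):
    """Velocity component perpendicular to heading theta for displacement (dx, dy)."""
    return (-math.sin(theta) * dx + math.cos(theta) * dy) / dt


if __name__ == "__main__":
    print(classify(2, 3).value)  # car: non-holonomic
    print(classify(7, 6).value)  # 7-DOF arm placing a 6-DOF hand: redundant

    x, y, theta = 0.0, 0.0, 0.3
    for speed, steer in [(1.0, 0.0), (2.0, 0.4), (-1.0, -0.3)]:
        nx, ny, ntheta = car_step(x, y, theta, speed, steer)
        v_side = lateral_velocity(theta, nx - x, ny - y, dt=0.1)
        print(f"speed={speed:+.1f} steer={steer:+.1f} sideways velocity={v_side:.1e}")
        x, y, theta = nx, ny, ntheta
```

Whatever speed and steering inputs are chosen, the sideways velocity stays at zero up to floating-point rounding. Reaching a pose that is offset sideways therefore requires a sequence of forward and reverse moves, as in parallel parking.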

Two basic ways of using effectors:

- to move the robot around (locomotion)
- to move other objects around (manipulation)

These divide robotics into two mostly separate categories:

- mobile robotics
- manipulator robotics

Mobility end effectors are discussed in more detail in the

mobility section of this web site.

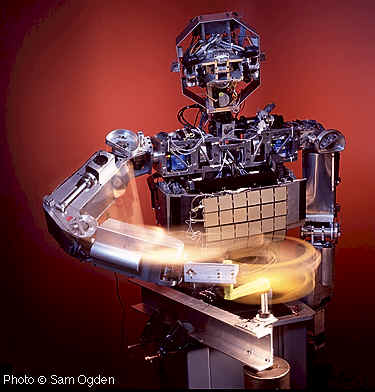

In contrast to locomotion, where the body of the robot is moved

to get to a particular position and orientation, a manipulator moves

itself typically to get the end effector (e.g., the hand, the

finger, the fingertip) to the desired 3D position and orientation. So

imagine having to touch a specific point in

3D space with the tip of your index finger; that's what a typical

manipulator has to do. Of course, largely manipulators need to grasp

and move objects, but those tasks are extensions of the basic reaching

above. The challenge is to get there efficiently and safely. Because

the end effector is attached to the whole arm, we have to worry about

the whole arm; the arm must move so that it does not try to violate

its own joint limits and it must not hit itself or the rest

of the robot, or any other obstacles in the environment. Thus, doing

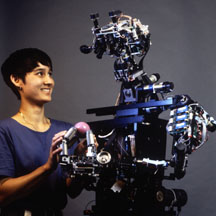

autonomous manipulation is very challenging. Manipulation was first

used in tele-operation, where human operators would move artificial

arms to handle hazardous materials. It turned out that it was quite

difficult for human operators to learn how to tele-operate complicated

arms (such as duplicates of human arms, with 7 DOF). One alternative

today is to put the human arm into an exo-skeleton (see lecture 1), in

order to make the control more direct. Using joy-sticks, for example,

is much harder for high DOF. Why is this so hard? Because even as we

saw with locomotion, there is typically no direct and obvious link

between what the effector needs to do in physical space and what the

actuator does to move it. In general, the correspondence between

actuator motion and the resulting effector motion is called

kinematics. In order to control a manipulator, we have to know

its kinematics (what is attached to what, how many joints there are,

how many DOF for each joint, etc.). We can formalize all of this

mathematically, and get an equation which will tell us how to convert

from, say, angles in each of the joints, to the Cartesian positions of

the end effector/point. This conversion from one to the other is

called computing the manipulator kinematics and inverse kinematics.


The reverse process, converting the Cartesian (x, y, z) position into a set of joint angles for the arm (thetas), is called inverse kinematics. Kinematics are the rules of what is attached to what, that is, the body structure. Inverse kinematics is computationally intensive, and the problem is even harder if the manipulator (the arm) is redundant.
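For the simplest possible manipulator, a planar arm with two rotary joints, both directions can be solved in closed form. The sketch below assumes link lengths of 1.0 and 0.8 (arbitrary units), and its function names are hypothetical. It shows forward kinematics (joint angles to (x, y)) and an analytic inverse kinematics based on the law of cosines. Even this non-redundant arm has two solutions for most reachable points, one for each way the elbow can bend. General 3D arms often have no such closed form and need numerical solvers, which is part of why inverse kinematics is computationally intensive.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=0.8):
    """Joint angles (radians) -> Cartesian (x, y) of the arm's end point."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=0.8):
    """Cartesian (x, y) -> list of (theta1, theta2) joint-angle solutions.

    Returns [] when the point is out of reach; otherwise returns the two
    elbow configurations (they coincide at the edge of the workspace).
    """
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        return []  # target is outside the reachable workspace
    s = math.sqrt(1.0 - c2 * c2)
    solutions = []
    for s2 in (s, -s):  # elbow bent one way, then the other
        theta2 = math.atan2(s2, c2)
        # Shoulder angle = direction to target minus the offset caused by link 2.
        theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
        solutions.append((theta1, theta2))
    return solutions


if __name__ == "__main__":
    target = forward_kinematics(0.5, 1.0)
    print("target:", [round(c, 3) for c in target])
    for t1, t2 in inverse_kinematics(*target):
        x, y = forward_kinematics(t1, t2)
        print(f"theta1={t1:+.3f} theta2={t2:+.3f} -> ({x:.3f}, {y:.3f})")
```

Running forward kinematics on each inverse solution is a cheap consistency check: both joint configurations land on the same target point.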

Manipulation involves

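Manipulators are effectors. Joints connect the parts of manipulators. The most common joint types are:

- rotary (revolute): rotation around a fixed axis
- prismatic (linear): sliding along a fixed axis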

These joints provide the DOF for an effector, so they must be chosen and designed carefully.

Robot manipulators can have one or more of each of those

joints. Now recall that any free body has 6 DOF; that means in order

to get the robot's end effector to an arbitrary position and

orientation, the robot requires a minimum of 6 joints. As it turns

out, the human arm (not counting the hand!) has 7 DOF. That's

sufficient for reaching any point with the hand, and it is also redundant, meaning that there are multiple ways in which any point can

be reached. This is good news and bad news; the fact that there are

multiple solutions means that there is a larger space to search

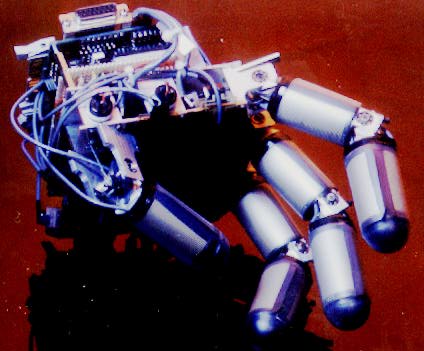

through to find the best solution. Now consider end effectors. They

can be simple pointers (i.e., a stick), simple 2D grippers,

screwdrivers for attaching tools (like welding guns, sprayer, etc.),

or can be as complex as the human hand, with variable numbers of

fingers and joints in the fingers. Problems like reaching and

grasping in manipulation constitute entire subareas of robotics and

AI. Issues include: finding grasp-points (COG, friction, etc.);

force/strength of grasp; compliance (e.g., in sliding, maintaining

contact with a surface); dynamic tasks (e.g., juggling, catching).

Other types of manipulation, such as carefully controlling force, as

in grasping fragile objects and maintaining contact with a surface

(so-called compliant motion), are also being actively

researched. Finally, dynamic manipulation tasks, such as juggling,

throwing, catching, etc., are already being demonstrated on robot

arms.

redundant, meaning that there are multiple ways in which any point can

be reached. This is good news and bad news; the fact that there are

multiple solutions means that there is a larger space to search

through to find the best solution. Now consider end effectors. They

can be simple pointers (i.e., a stick), simple 2D grippers,

screwdrivers for attaching tools (like welding guns, sprayer, etc.),

or can be as complex as the human hand, with variable numbers of

fingers and joints in the fingers. Problems like reaching and

grasping in manipulation constitute entire subareas of robotics and

AI. Issues include: finding grasp-points (COG, friction, etc.);

force/strength of grasp; compliance (e.g., in sliding, maintaining

contact with a surface); dynamic tasks (e.g., juggling, catching).

Other types of manipulation, such as carefully controlling force, as

in grasping fragile objects and maintaining contact with a surface

(so-called compliant motion), are also being actively

researched. Finally, dynamic manipulation tasks, such as juggling,

throwing, catching, etc., are already being demonstrated on robot

arms.
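Redundancy turns inverse kinematics into a search. The following is a minimal sketch. It assumes a hypothetical planar arm with three rotary joints that must place its tip at a 2D point, so there are 3 controllable DOF for a 2-DOF task. The extra DOF is parameterized by the absolute angle `phi` of the last link, and each sampled `phi` reduces to the closed-form two-link problem. Among all resulting candidates, the sketch keeps the one needing the least joint motion from the current configuration. The cost function, link lengths, sample count, and names (`fk3`, `ik2`, `redundant_ik`) are illustrative assumptions, not a method given in the text.

```python
import math

LENGTHS = (1.0, 0.8, 0.5)  # hypothetical link lengths


def fk3(q, lengths=LENGTHS):
    """Forward kinematics of a planar 3-link arm: joint angles -> tip (x, y)."""
    x = y = angle = 0.0
    for qi, li in zip(q, lengths):
        angle += qi  # absolute angle of this link
        x += li * math.cos(angle)
        y += li * math.sin(angle)
    return x, y


def ik2(x, y, l1, l2):
    """Closed-form IK for a planar 2-link arm; returns 0 or 2 solutions."""
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        return []
    s = math.sqrt(1.0 - c2 * c2)
    return [
        (math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2), math.atan2(s2, c2))
        for s2 in (s, -s)
    ]


def wrap(a):
    """Wrap an angle into [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def redundant_ik(x, y, current, lengths=LENGTHS, samples=360):
    """Search the redundant solution space of a 3-link arm reaching (x, y).

    The extra DOF is parameterized by phi, the absolute angle of the last link.
    Each phi reduces to a 2-link problem with up to two solutions. The candidate
    needing the least total joint motion from `current` is kept (assumed cost).
    Returns (best_q, n_candidates); best_q is None if (x, y) is unreachable.
    """
    l1, l2, l3 = lengths
    best, best_cost, n_candidates = None, math.inf, 0
    for k in range(samples):
        phi = 2 * math.pi * k / samples
        # Where the end of link 2 must be so that link 3 ends at (x, y).
        wx, wy = x - l3 * math.cos(phi), y - l3 * math.sin(phi)
        for q1, q2 in ik2(wx, wy, l1, l2):
            q = (wrap(q1), q2, wrap(phi - q1 - q2))
            n_candidates += 1
            cost = sum(wrap(a - b) ** 2 for a, b in zip(q, current))
            if cost < best_cost:
                best, best_cost = q, cost
    return best, n_candidates


if __name__ == "__main__":
    q, n = redundant_ik(1.2, 0.9, current=(0.0, 0.5, 0.5))
    print(f"{n} candidate configurations reach the target")
    print("chosen joint angles:", [round(a, 3) for a in q])
    print("tip position:", [round(c, 3) for c in fk3(q)])
```

Sampling `phi` on a fixed grid is simply the most direct way to expose the many solutions. A fuller planner would also reject candidates that violate joint limits or collide with the robot or obstacles, as described earlier, before picking the best one.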

Having talked about navigation and manipulation, think about what types of sensors (external and proprioceptive) would be useful for these general robotic tasks. Proprioceptive sensors sense the robot's actuators (e.g., shaft encoders, joint angle sensors); they sense the robot's own movements. You can think of them as perceiving internal state instead of external state. External sensors are helpful but neither necessary nor as commonly used.
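As an example of proprioception, a joint angle read from a shaft encoder is just a scaled count. The sketch assumes a hypothetical incremental encoder mounted on the motor shaft behind a gearbox. The class name `JointEncoder` and its parameters `counts_per_rev`, `gear_ratio`, and `zero_offset` are illustrative, not values or an API from any particular device.

```python
import math
from dataclasses import dataclass


@dataclass
class JointEncoder:
    """Hypothetical incremental shaft encoder on a geared joint motor."""

    counts_per_rev: int       # encoder counts per motor-shaft revolution (assumed)
    gear_ratio: float         # motor revolutions per joint revolution (assumed)
    zero_offset: float = 0.0  # joint angle in radians when the count is zero

    def joint_angle(self, count: int) -> float:
        """Convert a raw encoder count into a joint angle in radians."""
        motor_revs = count / self.counts_per_rev
        return self.zero_offset + 2.0 * math.pi * motor_revs / self.gear_ratio

    def joint_velocity(self, count_prev: int, count_now: int, dt: float) -> float:
        """Estimate joint speed (rad/s) from two readings taken dt seconds apart."""
        return (self.joint_angle(count_now) - self.joint_angle(count_prev)) / dt


if __name__ == "__main__":
    enc = JointEncoder(counts_per_rev=2048, gear_ratio=50.0)
    print(f"{enc.joint_angle(25600):.4f} rad")  # quarter turn of the joint
    print(f"{enc.joint_velocity(0, 25600, 0.5):.4f} rad/s")
```

With `counts_per_rev=2048` and `gear_ratio=50`, one joint revolution is 2048 × 50 = 102,400 counts, so a count of 25,600 corresponds to a quarter turn (π/2 rad). Angles obtained this way are exactly the joint-angle inputs that forward kinematics needs.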